Abstract

Perception is crucial for autonomous driving, but single-agent perception is often constrained by sensors' physical limitations, leading to degraded performance under severe occlusion, in adverse weather conditions, and when detecting distant objects. Multi-agent collaborative perception offers a solution, yet challenges arise when integrating heterogeneous agents with varying model architectures. To address these challenges, we propose STAMP, a scalable, task- and model-agnostic collaborative perception pipeline for heterogeneous agents. STAMP utilizes lightweight adapter-reverter pairs to transform Bird's Eye View (BEV) features between agent-specific and shared protocol domains, enabling efficient feature sharing and fusion. This approach minimizes computational overhead, enhances scalability, and preserves model security. Experiments on simulated and real-world datasets demonstrate STAMP's comparable or superior accuracy to state-of-the-art models with significantly reduced computational costs. As a first-of-its-kind task- and model-agnostic framework, STAMP aims to advance research in scalable and secure mobility systems towards Level 5 autonomy.

Pipeline Overview

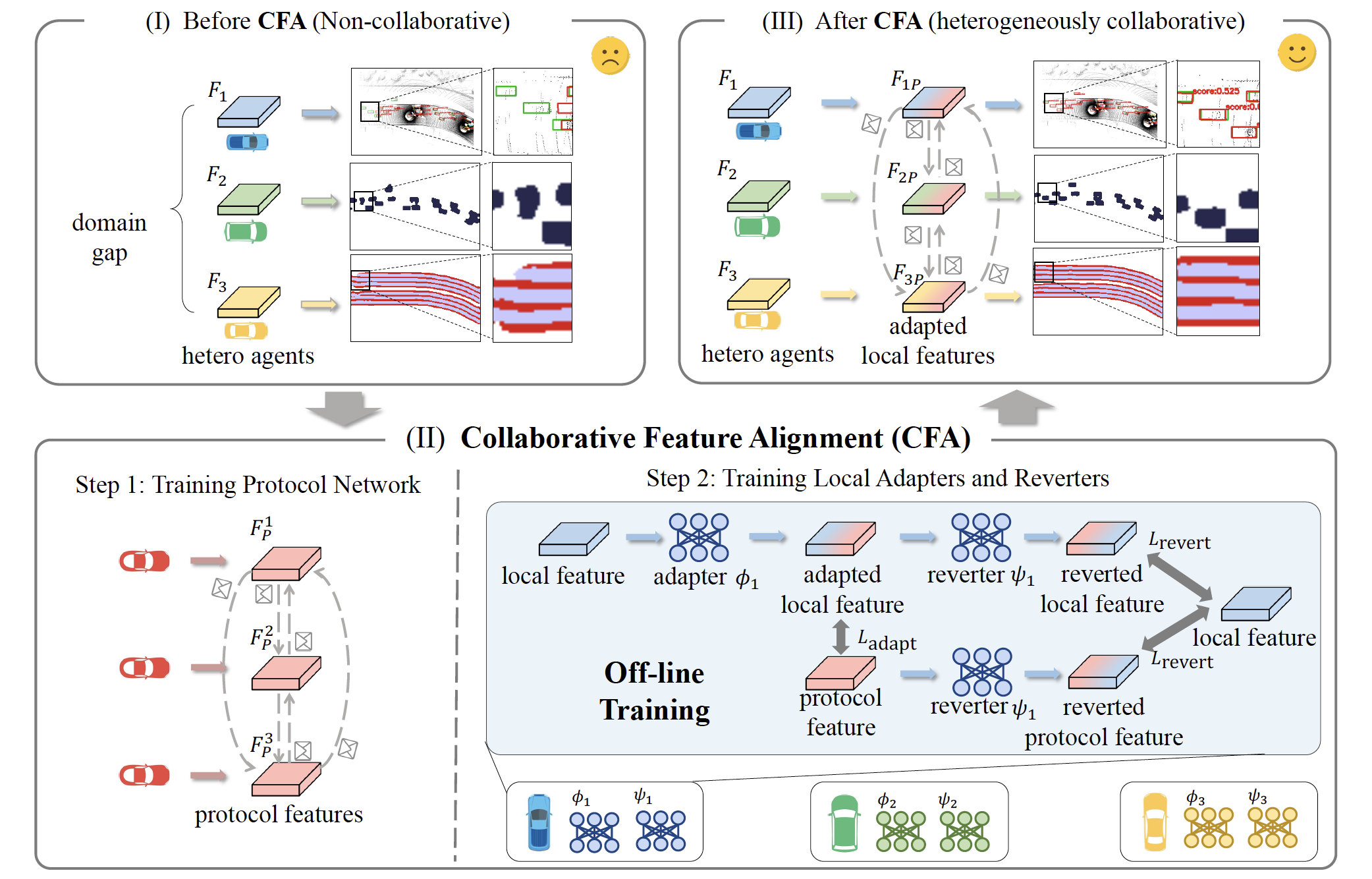

STAMP allows agents to share feature maps in the protocol representation while keeping their original local feature maps secure.

- We train a protocol model to learn a protocol feature space.

- All agents' adapters and reverters are trained locally.

- Features of each local agent are adapted to the protocol feature space before being broadcast.

- Each local agent receives other agents' feature maps and reverts them to their own local feature spaces for fusion.

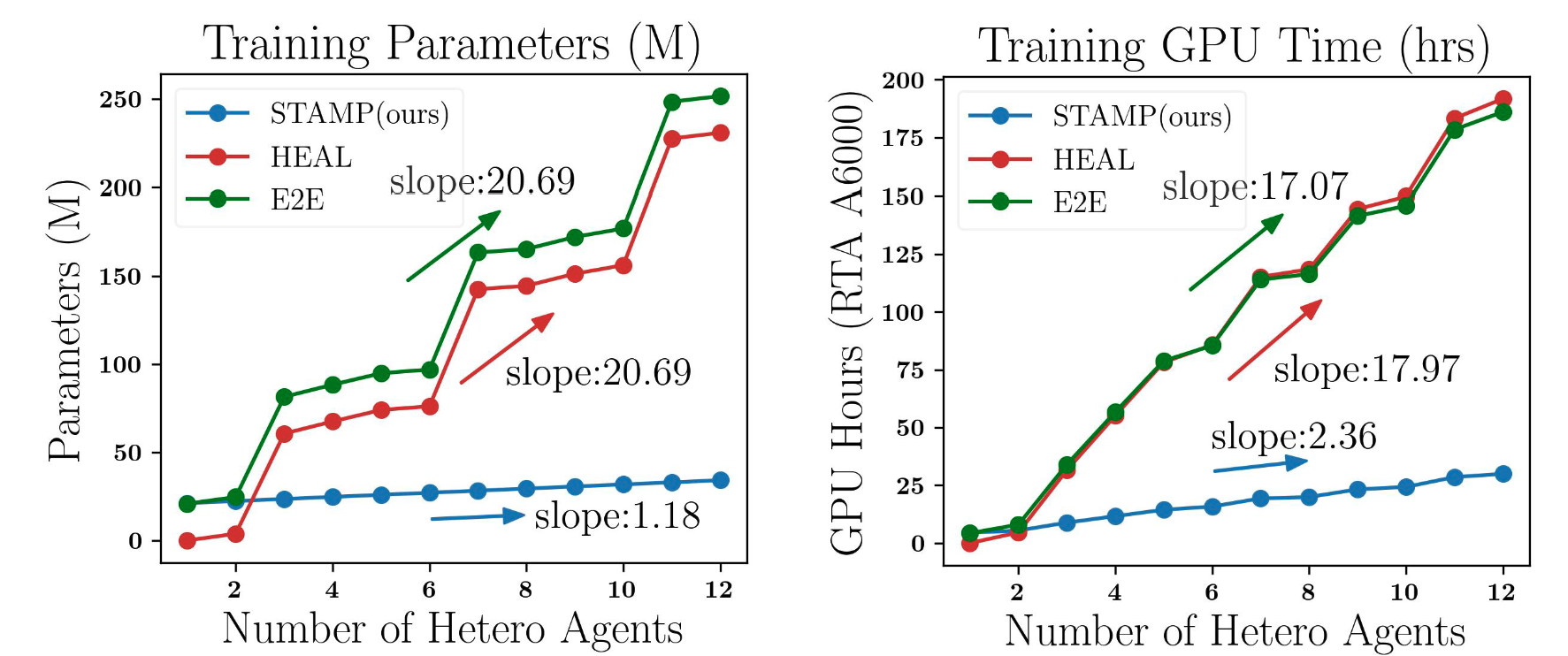
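The adapt, broadcast, revert, and fuse steps listed above can be illustrated with a minimal sketch. It is not the STAMP implementation: the learned adapter and reverter are replaced by fixed per-cell linear channel maps, fusion is a plain element-wise max, and every name (`make_linear_map`, `fuse_max`, the weights and feature values) is a hypothetical chosen for illustration.

```python
from typing import Callable, List

# A BEV feature map flattened to [cells][channels]; this layout is an assumption.
Feature = List[List[float]]


def make_linear_map(weights: List[List[float]]) -> Callable[[Feature], Feature]:
    """Return a per-cell linear channel map (stand-in for a learned adapter or reverter)."""
    def apply(feature: Feature) -> Feature:
        return [
            [sum(w * x for w, x in zip(row, cell)) for row in weights]
            for cell in feature
        ]
    return apply


def fuse_max(features: List[Feature]) -> Feature:
    """Element-wise max over spatially aligned feature maps (one simple fusion choice)."""
    return [
        [max(values) for values in zip(*cells)]
        for cells in zip(*features)
    ]


# Agent A maps its 2 local channels into a 3-channel protocol space (toy weights).
adapter_a = make_linear_map([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
# Agent B maps protocol features back into its own 2-channel local space (toy weights).
reverter_b = make_linear_map([[2.0, 0.0, 0.0], [0.0, 2.0, 0.0]])

local_a = [[1.0, 2.0], [0.0, 3.0]]        # Agent A's local features for two BEV cells
protocol_msg = adapter_a(local_a)         # what Agent A broadcasts
received_b = reverter_b(protocol_msg)     # Agent B's view of A's features
local_b = [[5.0, 1.0], [0.5, 0.5]]        # Agent B's own local features
fused_b = fuse_max([local_b, received_b]) # Agent B fuses in its local space
```

Only `protocol_msg` leaves Agent A in this sketch; `local_a` and the weights of Agent A's own model are never transmitted, which is the property the pipeline relies on to keep local feature maps secure.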

Training Efficiency

Training cost comparison on the OPV2V dataset: the number of parameters to train and the estimated training GPU hours as the number of heterogeneous agents increases from 1 to 12. End-to-end training and HEAL exhibit a steep increase in both parameters and GPU hours as the number of agents grows. In contrast, although our pipeline shows higher parameter counts and GPU hours with only one or two agents (due to the training of the protocol model), it grows much more slowly because the proposed adapter-reverter pair is lightweight and takes only 5 epochs to train. This highlights the scalability of our pipeline.

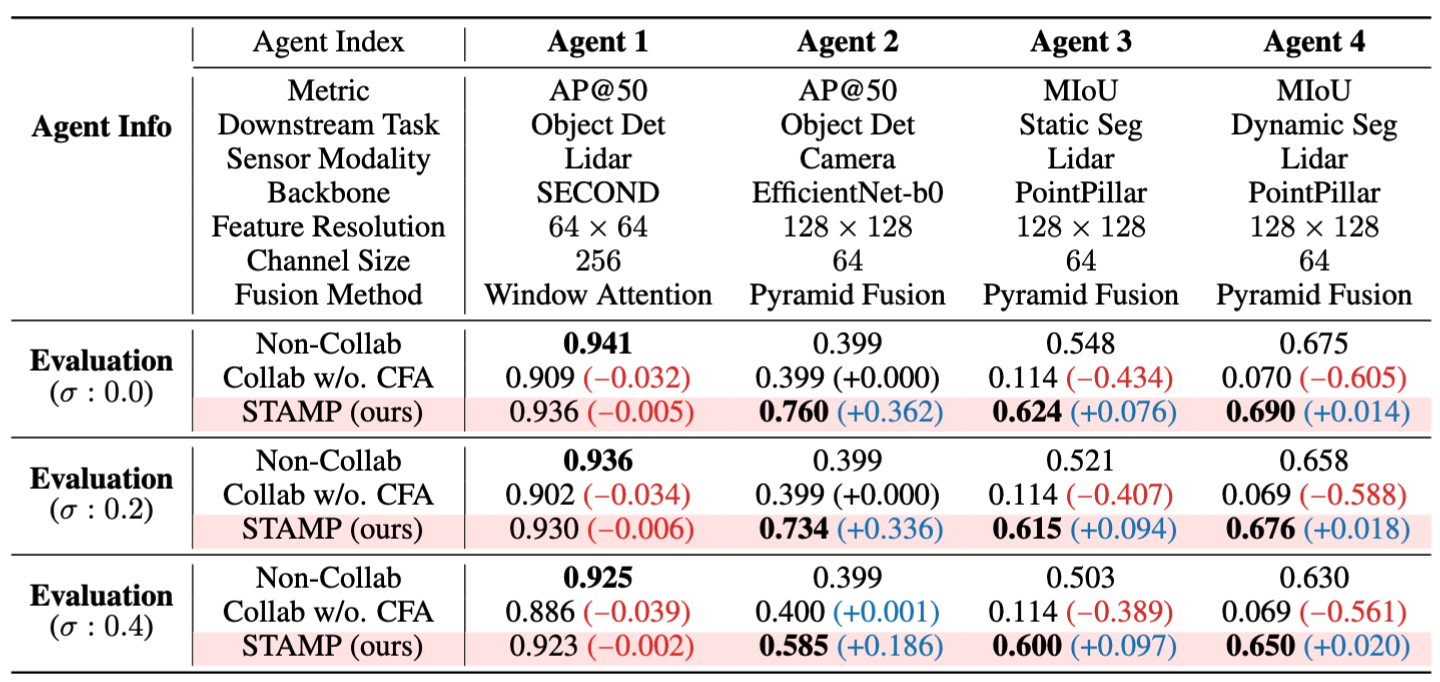
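The crossover described above (higher cost for one or two agents, lower cost afterwards) can be reasoned about with a toy cost model. The sketch below assumes, purely for illustration, that a baseline pays a fixed cost per added agent while STAMP pays a one-time protocol cost plus a smaller per-agent adapter-reverter cost. The linear form, the function names, and the placeholder numbers are all assumptions and are not measurements from the experiments.

```python
import math


def stamp_cost(n_agents: int, protocol_cost: float, pair_cost: float) -> float:
    """One-time protocol model cost plus one adapter-reverter pair per agent."""
    return protocol_cost + n_agents * pair_cost


def baseline_cost(n_agents: int, per_agent_cost: float) -> float:
    """Assumed linear baseline: a fixed training cost for every agent."""
    return n_agents * per_agent_cost


def break_even_agents(protocol_cost: float, pair_cost: float, per_agent_cost: float) -> int:
    """Smallest agent count at which the STAMP-style cost is strictly lower."""
    if per_agent_cost <= pair_cost:
        raise ValueError("baseline is never more expensive under these assumptions")
    return math.floor(protocol_cost / (per_agent_cost - pair_cost)) + 1


# Unitless placeholder values, NOT taken from the paper.
n_star = break_even_agents(protocol_cost=10.0, pair_cost=1.0, per_agent_cost=5.0)
```

With these placeholder values the crossover falls at three agents; the real crossover depends on the measured costs shown in the figure.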

Performance in Task and Model-agnostic Collaborative Perception

Heterogeneous collaborative perception results in a model- and task-agnostic setting. Tasks include 3D object detection ('Object Det'), static object BEV segmentation ('Static Seg'), and dynamic object BEV segmentation ('Dynamic Seg'). 3D object detection is evaluated using Average Precision at a 50% IoU threshold (AP@50), while segmentation tasks use Mean Intersection over Union (MIoU). Our method consistently outperforms single-agent segmentation for Agents 3 and 4 in the BEV segmentation tasks. For Agent 2's camera-based 3D object detection, our pipeline achieves substantial gains (e.g., AP@50 improves from 0.399 to 0.760 in noiseless conditions), while collaboration without feature alignment shows negligible changes.

However, we observe that both collaborative approaches lead to performance degradation for Agent 1 compared to its single-agent baseline, although our method still outperforms collaboration without feature alignment. This unexpected outcome is attributed to the limitations of Agent 2, which relies solely on less accurate camera sensors for 3D object detection. This scenario illustrates a bottleneck effect, where a weaker agent constrains the overall system performance, negatively impacting even the strongest agents. This challenge in multi-agent collaboration systems prompts us to introduce the concept of a **Multi-group Collaboration System**.
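For readers unfamiliar with the segmentation metric used in these results, the following is a minimal sketch of Mean Intersection over Union on BEV label grids. It assumes integer class labels per cell and skips classes that appear in neither prediction nor ground truth; evaluation code for a given benchmark may use different conventions (for example, accumulating over a whole dataset before averaging), so this is illustrative rather than the evaluation script used here.

```python
from typing import Sequence


def mean_iou(
    pred: Sequence[Sequence[int]],
    target: Sequence[Sequence[int]],
    num_classes: int,
) -> float:
    """Mean IoU over classes for two equally sized 2D label grids."""
    ious = []
    for c in range(num_classes):
        inter = 0
        union = 0
        for pred_row, target_row in zip(pred, target):
            for p, t in zip(pred_row, target_row):
                inter += int(p == c and t == c)  # cell labelled c in both grids
                union += int(p == c or t == c)   # cell labelled c in at least one grid
        if union > 0:  # skip classes absent from both grids (an assumed convention)
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


# Toy 2x2 BEV grids with two classes (0 = background, 1 = object).
pred_grid = [[0, 1], [1, 1]]
target_grid = [[0, 1], [0, 1]]
score = mean_iou(pred_grid, target_grid, num_classes=2)
```

In the toy example, class 0 has IoU 1/2 and class 1 has IoU 2/3, so the mean is 7/12.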

Our Vision

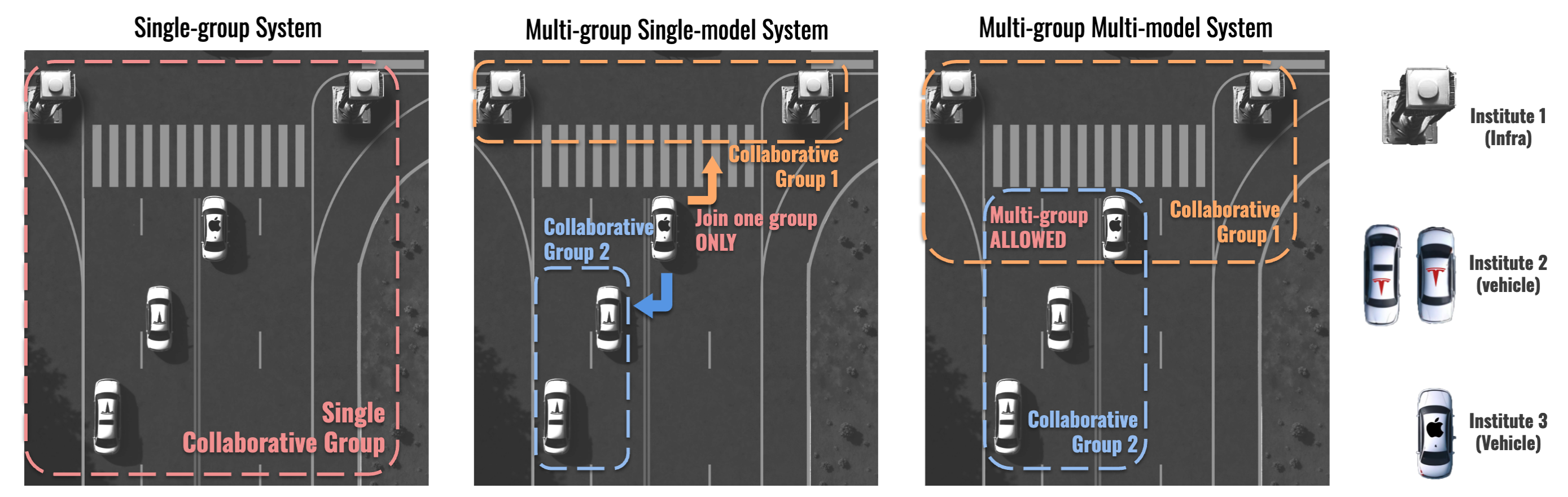

We can distinguish between three collaborative system types:

- Single-group systems, in which agents either operate independently or are compelled to collaborate with all others. These systems are susceptible to performance bottlenecks caused by inferior agents and to vulnerabilities introduced by malicious attackers.

- Multi-group single-model systems, which allow multiple collaboration groups but restrict each agent to a single group, because each agent can equip only one model.

- Multi-group multi-model systems, which enable agents to join multiple groups if they meet the predefined standards.

The multi-group structure offers significant advantages over traditional single-group systems. It enhances agents' potential for diverse collaborations, consequently improving overall performance. This approach mitigates the bottleneck effect by allowing high-performing agents to maintain efficiency within groups of similar capability while potentially assisting less capable agents in other groups. Furthermore, it enhances system flexibility, enabling dynamic group formation based on specific task requirements or environmental conditions.

However, implementing such a multi-group system poses challenges for existing heterogeneous collaborative pipelines. End-to-end training approaches require simultaneous training of all models, conflicting with the concept of distinct collaboration groups. Methods that require separate encoders for each group become impractical as the number of groups increases due to computational and memory constraints.

Our proposed STAMP framework effectively addresses these limitations, offering a scalable solution for multi-group collaborative perception. The key innovation lies in its lightweight adapter and reverter pair (approximately 1MB) required for each collaboration group an agent joins. This efficient design enables agents to equip multiple adapter-reverter pairs, facilitating seamless participation in various groups without significant computational overhead. The minimal memory footprint ensures scalability, even as agents join numerous collaboration groups, making STAMP particularly well-suited for multi-group and multi-model collaboration systems.
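One way to picture multi-group membership is as a small per-agent registry with one adapter-reverter pair per group. The sketch below is a hypothetical data structure, not part of STAMP: the class names, group identifiers, and agent names are invented for illustration, and the only figure taken from the text is the approximate 1MB size per pair.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

PAIR_SIZE_MB = 1.0  # approximate size of one adapter-reverter pair, as stated above


@dataclass
class AdapterReverterPair:
    """Placeholder for the weights an agent holds for one collaboration group."""
    group_id: str
    size_mb: float = PAIR_SIZE_MB


@dataclass
class Agent:
    name: str
    pairs: Dict[str, AdapterReverterPair] = field(default_factory=dict)

    def join(self, group_id: str) -> None:
        """Equip a pair for a group; joining the same group twice is a no-op."""
        self.pairs.setdefault(group_id, AdapterReverterPair(group_id))

    def shared_groups(self, other: "Agent") -> Set[str]:
        """Groups in which both agents can exchange protocol features."""
        return set(self.pairs) & set(other.pairs)

    def footprint_mb(self) -> float:
        """Total memory taken by all equipped pairs."""
        return sum(pair.size_mb for pair in self.pairs.values())


# Hypothetical agents and group identifiers.
lidar_agent = Agent("lidar_agent")
lidar_agent.join("group_1")
lidar_agent.join("group_2")
camera_agent = Agent("camera_agent")
camera_agent.join("group_2")

common = lidar_agent.shared_groups(camera_agent)
total_mb = lidar_agent.footprint_mb()
```

Under this assumption the memory cost grows by roughly 1MB per joined group, so an agent in ten groups would carry about 10MB of adapter-reverter weights in addition to its own model.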